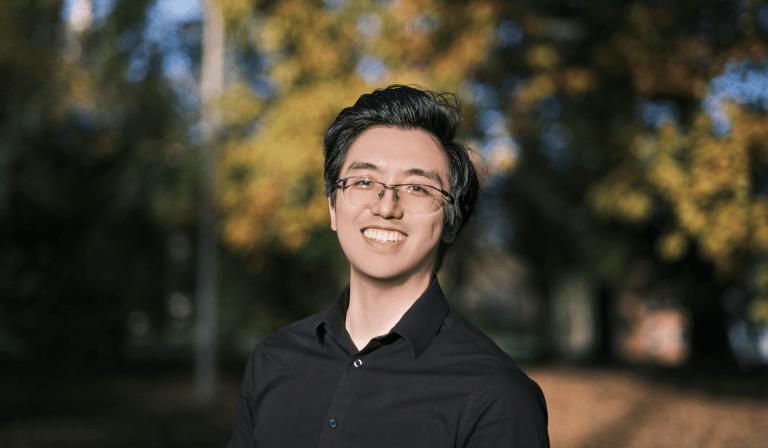

Dr. Yue Zhao is an Assistant Professor of Computer Science at the University of Southern California and a faculty member of the USC Machine Learning Center. He leads the FORTIS Lab (Foundations Of Robust Trustworthy Intelligent Systems), where his research spans three connected levels: establishing principles for robust and trustworthy AI, applying structured and generative AI methods to scientific and societal problems, and developing scalable, open-source AI systems. His work has applications in healthcare, finance, molecular science, and political science. Dr. Zhao has authored over 50 papers in top-tier venues and is recognized for his open-source contributions, including PyOD, PyGOD, TDC, and TrustLLM, which collectively have over 20,000 GitHub stars and 25 million downloads. His projects have been used by well-known organizations such as NASA, Morgan Stanley, and the U.S. Senate Committee on Homeland Security & Governmental Affairs. Dr. Zhao has received numerous awards, including the Capital One Research Awards, AAAI New Faculty Highlights Award, Google Cloud Research Innovators, Norton Fellowship, Meta AI4AI Research Award, and the CMU Presidential Fellowship. He also serves as an associate editor for IEEE Transactions on Neural Networks and Learning Systems (TNNLS), an action editor for the Journal of Data-centric Machine Learning Research (DMLR), and an area chair for leading machine learning conferences.

In this talk, we will explore the challenges and advances in outlier and out-of-distribution detection, both of which are critical for building robust AI systems. We will discuss techniques and frameworks developed to address these challenges, focusing on their applications in real-world scenarios.